Zhenjiang Mingxin Machine Tool Co., Ltd.

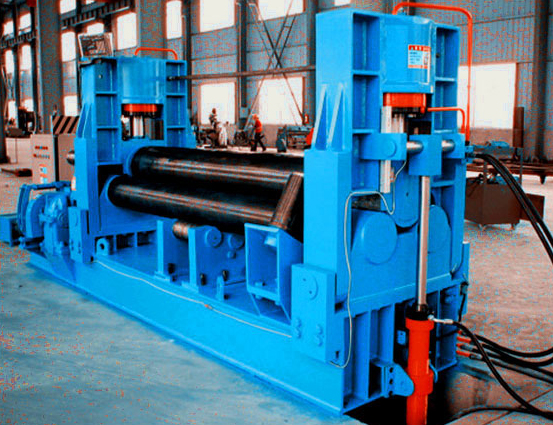

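The company is a specialized manufacturer designated by the former China National Machine Tool Corporation to produce plate rolling, straightening, shearing and folding, and profile bending machine tools. Located in the ancient city of Zhenjiang in the Jiangnan region, it has thirty years of experience producing forging and metal-forming machinery and a mature technical base. It employs a team of municipal-level technical specialists dedicated to research on plate rolling, straightening, shearing, folding, and profile bending equipment. Its current main products are plate rolling machines (three-roll plate rolling machines), leveling machines, shearing machines, press brakes, and profile bending machines.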

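These products are widely used in equipment-manufacturing industries such as petroleum, chemicals, boilers, wind turbine towers, shipbuilding, cement, and aviation. Drawing on advanced technology and modern management methods, all of the company's employees strive for product quality and carry out production, sales, and service guided by the principle of benefiting customers and serving society. The company's products are used throughout China and exported to Southeast Asia and the Middle East, where they are well received by users.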